Passionate hunter and Microsoft employee Christian Heidl had an idea: could an artificial intelligence observe wild animals and recognize individual animals? The AI findings would then be used not only to prepare the hunting schedule and forecast possible damage to new plantations, but also to analyze anomalies related to pandemics. So he turned to the AI team at Arineo GmbH. At that time, the team led by Dr. Gerhard Heinzerling and Dimas Wiese was busy developing a smart city traffic census for the city of Göttingen. They found that the two projects complemented each other well, since both would be based on image augmentation and segmentation.

So the task for the government-funded AI researchers was to program an artificial intelligence that detects deer in images from different cameras and classifies them correctly. The aim was to record the migratory behavior of the animals, evaluate it, and put it to use. The team set to work, collected images of deer, and trained their algorithms. The result: as part of a feasibility study, they were able to show that observing animals with AI algorithms is not only possible but, more importantly, accurate enough for scientific purposes.

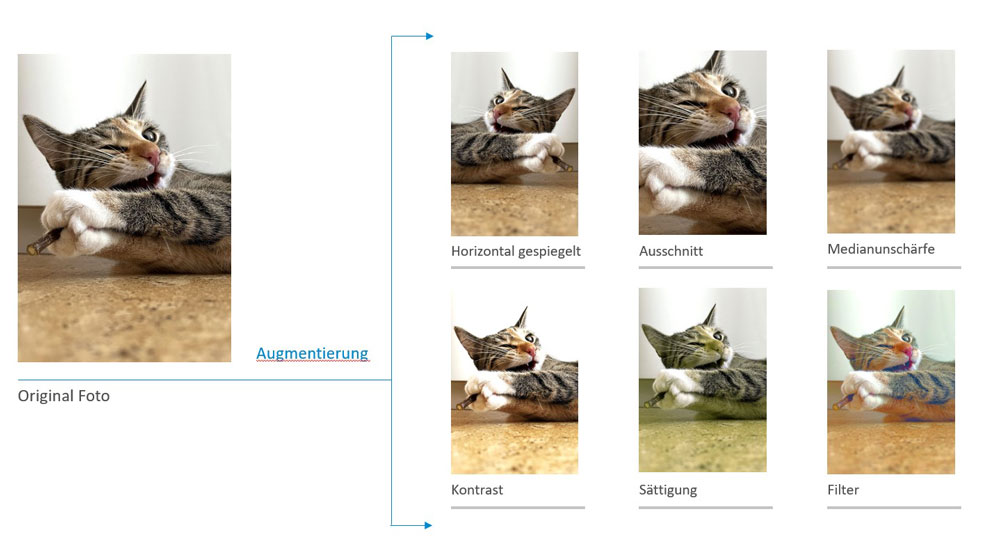

Image Augmentation

Augmentation is the term for a technique that creates many variants of an original image, for example by mirroring or cropping it or by adding extra image layers such as rain. These artificially generated images make training AI algorithms particularly efficient, because the large number of images an AI needs for training is often difficult to obtain without augmentation.
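To make the idea concrete, here is a minimal, dependency-free sketch of the three transformations named above: mirroring, cropping, and a rain layer. It assumes grayscale images stored as plain lists of pixel rows; the function names, the 3-pixel rain streaks, and the 10 % rain density are illustrative choices, not the team's actual pipeline.

```python
import random
from typing import List

# A grayscale image as rows of pixel values (0 = black, 255 = white).
Image = List[List[int]]


def mirror(img: Image) -> Image:
    """Flip the image horizontally (left to right)."""
    return [list(reversed(row)) for row in img]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Cut out the rectangle starting at (top, left) with the given size."""
    return [row[left:left + width] for row in img[top:top + height]]


def add_rain(img: Image, density: float, rng: random.Random) -> Image:
    """Overlay short bright vertical streaks in random columns to mimic rain."""
    out = [row[:] for row in img]
    height, width = len(out), len(out[0])
    for x in range(width):
        if rng.random() < density:
            start = rng.randrange(height)
            for y in range(start, min(height, start + 3)):  # 3-pixel streak
                out[y][x] = 255
    return out


def augment(img: Image, n_variants: int, seed: int = 0) -> List[Image]:
    """Create n_variants randomly mirrored, cropped, and 'rained-on' copies."""
    rng = random.Random(seed)
    height, width = len(img), len(img[0])
    variants = []
    for _ in range(n_variants):
        # Mirror roughly half of the variants.
        v = mirror(img) if rng.random() < 0.5 else [row[:] for row in img]
        # Random crop covering at least half of each dimension.
        ch = rng.randint(max(1, height // 2), height)
        cw = rng.randint(max(1, width // 2), width)
        v = crop(v, rng.randint(0, height - ch), rng.randint(0, width - cw), ch, cw)
        variants.append(add_rain(v, density=0.1, rng=rng))
    return variants
```

In practice, image libraries such as torchvision or Albumentations provide ready-made transforms of this kind; plain lists are used here only to keep the sketch self-contained.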

Gerhard, Dimas, how did you tackle the problem of deer recognition?

First, with the active support of our working students Christin Müller and Sebastian Kampen, we collected as many pictures of deer as possible and used them to train an object recognition model. You may already be familiar with this technique from other applications, such as face or gesture recognition in cell phone cameras. With the help of this object recognition, we were able to filter out all images that did not show deer. Where there were deer in a picture, we cropped them out. The goal: each cropped image should show as much deer and as little background as possible.
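The filtering and cropping step can be sketched as follows, assuming an object detector has already produced a list of labeled bounding boxes for each image. The `Detection` format, the `"deer"` label, and the 0.5 confidence threshold are hypothetical; the detector itself is not shown.

```python
from dataclasses import dataclass
from typing import List, Tuple

Image = List[List[int]]


@dataclass
class Detection:
    """One object found by a detector (hypothetical format for illustration)."""
    label: str
    score: float                   # detector confidence between 0 and 1
    box: Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def crop_deer(img: Image, detections: List[Detection], min_score: float = 0.5) -> List[Image]:
    """Return one tightly cropped image per confident 'deer' detection.

    An empty result means the image shows no deer and can be discarded.
    """
    crops = []
    for det in detections:
        if det.label != "deer" or det.score < min_score:
            continue  # skip other objects and uncertain hits
        top, left, bottom, right = det.box
        crops.append([row[left:right] for row in img[top:bottom]])
    return crops
```

Cropping to the bounding box is what keeps "as much deer as possible" in each image: everything outside the box is dropped before any further processing.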

What did you do with the pictures then?

Next, we performed a so-called semantic segmentation on them. This hides the background, so that trees and meadows, for example, are no longer taken into account.
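Once a segmentation model has labeled every pixel as "deer" or "background", hiding the background is a simple per-pixel operation. The sketch below assumes the model's output is already available as a boolean mask of the same shape as the image; the function name and the fill value of 0 (black) are illustrative.

```python
from typing import List

Image = List[List[int]]
Mask = List[List[bool]]  # True where the segmentation model sees deer


def hide_background(img: Image, mask: Mask, fill: int = 0) -> Image:
    """Keep pixels the mask marks as deer; replace all others with `fill`."""
    if len(img) != len(mask) or any(len(r) != len(m) for r, m in zip(img, mask)):
        raise ValueError("image and mask must have the same shape")
    return [[pixel if is_deer else fill for pixel, is_deer in zip(row, mask_row)]
            for row, mask_row in zip(img, mask)]
```

Because the masked pixels all carry the same constant value, later feature extraction can no longer pick up cues from trees or meadows.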

And with these images you can then search for similar images?

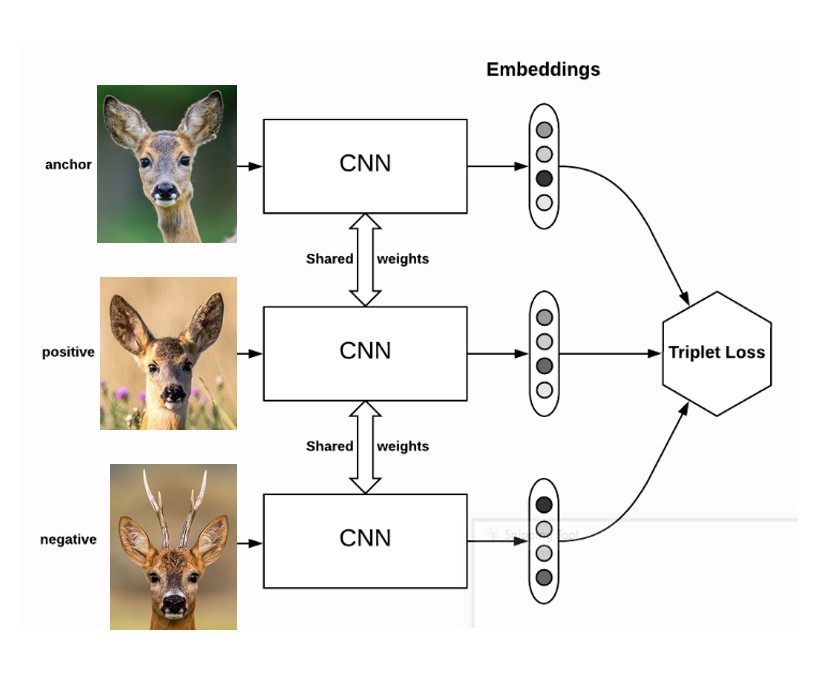

If you have an image of a deer and want to find that specific animal in a set of other deer images, an AI algorithm first determines the characteristics of certain features for each image. These feature characteristics are ultimately a list of numbers that encode the exact appearance of the animal's nose, ears, body shape, and so on. If the feature characteristics of two images are particularly similar, the two images are likely to show the same individual deer.
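Comparing two such lists of numbers (feature vectors) can be sketched with cosine similarity, one common choice; the interview does not say which similarity measure the team uses. The feature vectors are assumed to come from a trained network and are passed in directly here; the image names in the gallery are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("feature vectors must be non-zero")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: List[float],
                       gallery: Dict[str, List[float]]) -> List[Tuple[str, float]]:
    """Sort gallery images from most to least similar to the query image."""
    scores = [(name, cosine_similarity(query, vec)) for name, vec in gallery.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Usage with made-up three-dimensional features:
ranking = rank_by_similarity(
    [0.9, 0.1, 0.4],
    {"camera2_night.jpg": [0.8, 0.2, 0.5], "camera3_day.jpg": [0.1, 0.9, 0.0]},
)
```

The top-ranked entries are the best candidates for showing the same individual; a threshold on the score decides when "particularly similar" is similar enough.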

But we went one step further and tried to find specific features in the pictures, such as noses or ears. We were then able to use these more abstract features to find the same animals in images from other cameras. Ultimately, this is where the real difficulty of the task lies, as the animals may have been recorded at different times of day and night.

You’ll have to explain that to us in a little more detail. How do you determine the similarity?

We determine image similarity by training a special network that is fed three images at a time. First, we need an “anchor” image: this shows the deer that needs to be recognized. The anchor is paired with a second image of the same deer, which we call “positive”, and a third image of a different deer that looks similar but can still be clearly told apart, which we call “negative”. If you train such a network with enough of these image triplets, it learns to recognize the same deer in different images: the features it computes for images of the same animal end up close together, while those of different animals end up far apart.
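Networks trained on anchor/positive/negative triplets are commonly optimized with the triplet loss $L = \max\left(0,\ \lVert f(a) - f(p) \rVert^2 - \lVert f(a) - f(n) \rVert^2 + \alpha\right)$, where $f$ is the network and $\alpha$ a margin. The interview does not name the exact loss, so the sketch below assumes this standard form with squared Euclidean distance and a hypothetical margin of 0.2. It only evaluates the loss on given embeddings; the network and its gradient updates are left out.

```python
from typing import List, Sequence, Tuple


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 0.2) -> float:
    """Zero once the negative is at least `margin` farther from the anchor
    than the positive is; otherwise positive, so training pushes them apart."""
    return max(0.0, squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin)


def batch_loss(triplets: List[Tuple[Sequence[float], Sequence[float], Sequence[float]]],
               margin: float = 0.2) -> float:
    """Average triplet loss over a batch of (anchor, positive, negative) embeddings."""
    return sum(triplet_loss(a, p, n, margin) for a, p, n in triplets) / len(triplets)
```

The margin is what makes the "similar, but still distinguishable" negatives useful: a negative that is already far away contributes nothing, while a look-alike deer produces a loss that the training has to reduce. Deep learning frameworks offer built-in versions, for example PyTorch's `TripletMarginLoss`.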

So in this way you can find all the images that show a particular deer. But what if you are instead interested in the total number of individuals across all of the images? Can this also be determined?

Yes, this is possible in a very similar way. First, the similarity of each image to every other image is determined. Based on these similarities, the images can then be divided into clusters, which in effect isolates the individual deer. Within a cluster, the features of the deer are very similar, so the images probably show the same individual. Images in different clusters, by contrast, show deer with differing features. The number of clusters therefore corresponds to the number of individual deer across all of the images.
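One simple way to turn pairwise similarities into clusters is to link every pair of images whose similarity reaches a threshold and then count the connected groups. This is an assumption for illustration: the interview does not say which clustering method is used, and the 0.9 threshold is hypothetical. The sketch uses cosine similarity on precomputed feature vectors and a union-find structure to track the groups.

```python
import math
from typing import List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two (non-zero) feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def cluster_images(features: List[List[float]], threshold: float = 0.9) -> List[int]:
    """Assign a cluster label to every image; linked images share a label.

    Two images are linked when their similarity reaches `threshold`;
    clusters are the connected groups of linked images (union-find).
    """
    parent = list(range(len(features)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i in range(len(features)):
        for j in range(i + 1, len(features)):
            if cosine_similarity(features[i], features[j]) >= threshold:
                parent[find(i)] = find(j)  # merge the two clusters
    return [find(i) for i in range(len(features))]


def count_individuals(features: List[List[float]], threshold: float = 0.9) -> int:
    """Estimated number of distinct deer = number of clusters."""
    return len(set(cluster_images(features, threshold)))
```

This threshold-and-link approach can chain two different animals together through a series of borderline matches, so real systems often use more robust methods such as hierarchical clustering or DBSCAN (both available in scikit-learn).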

Could the procedure be applied to other species?

In principle, this procedure can be applied to individuals of any species whose members can be told apart visually. It is always important, however, that the image quality is good enough for the decisive features to be recognized. In addition, considerable effort must first go into creating a dataset to train the AI algorithms, because an algorithm optimized to determine the similarity of individual deer cannot be used directly to recognize, say, raccoons. This is where image augmentation often comes into play.

And are there already interested parties for an application like this?

Yes, there are. For example, the University of Göttingen, the Nuremberg Zoo, and the Alpine Club. They want to use our artificial intelligence to reduce the manual effort involved in viewing and sorting camera images – and, thus, get research-relevant data more quickly.

Gerhard, Dimas, thank you very much for the interview.